For a project I’m working on I’ve got to display data that’s coming from SSRS (SQL Server Reporting Services) on a web page for our users. One of the feedback items from the first round of user testing was that the error messages from the control were not helpful or friendly.

For example, if a user types a date in an incorrect format, the Report Viewer control returns an error message like this:

The value provided for the report parameter 'pToDate' is not valid for its type. (rsReportParameterTypeMismatch)

The user doesn’t know what “pToDate” is. The nearest possible alternative on the page reads “To Date”, so they could probably take a good guess, but why risk support calls over something like that? And the “(rsReportParameterTypeMismatch)” is likely to mean even less to a user. Why should they even have to see things like that?

So I set about trying to change that.

In fact, the control gives you no way to alter the error messages that it shows. There is a ReportError event that you can subscribe to and use to indicate that you’ve handled the error, but there is nowhere to provide a better error message.

With that in mind I thought that what I’d do is create a Label on the page to hold the error message and populate it if an error occurred. However, I found that while I could get the message to display initially, I could not get it to go away once the user had corrected the error.

What I had was this:

ASPX:

    <asp:Label runat="server" ID="ReportErrorMessage" Visible="false"
        CssClass="report-error-message"></asp:Label>

    <rsweb:reportviewer runat="server" ID="TheReport" Font-Names="Verdana"
        Width="100%" Height="100%" Font-Size="8pt"
        InteractiveDeviceInfos="(Collection)" ProcessingMode="Remote"
        InteractivityPostBackMode="AlwaysSynchronous"
        WaitMessageFont-Names="Verdana" WaitMessageFont-Size="14pt"
        OnReportError="TheReport_ReportError"
        OnReportRefresh="TheReport_ReportRefresh">
        <serverreport reportpath="/Path/To/The/Report"
            reportserverurl="http://the-report-server.com/ReportServer" />
    </rsweb:reportviewer>

C#:

    protected void TheReport_ReportError(object sender, ReportErrorEventArgs e)
    {
        // Pick a friendlier message based on the error code in the exception text.
        if (e.Exception.Message.Contains("rsReportParameterTypeMismatch"))
            ReportErrorMessage.Text = BuildBadParameterMessage(e);
        else
            ReportErrorMessage.Text = BuildUnknownErrorMessage(e);

        ReportErrorMessage.Visible = true;

        // Tell the control that the error has been dealt with.
        e.Handled = true;
    }

    protected void TheReport_ReportRefresh(object sender, CancelEventArgs e)
    {
        // Clear any earlier error message when the report is refreshed.
        ReportErrorMessage.Visible = false;
        ReportErrorMessage.Text = string.Empty;
    }

The initial message was being set, but the changes made in TheReport_ReportRefresh were not being applied, even though I had verified that the code was being run.

I eventually realised that the report viewer control was performing a partial postback rather than a full one. During a partial postback only the content inside an UpdatePanel is re-rendered in the browser, so I needed to put the Label control inside an update panel, like this:

    <asp:UpdatePanel runat="server">
        <ContentTemplate>
            <asp:Label runat="server" ID="ReportErrorMessage" Visible="false"
                CssClass="report-error-message"></asp:Label>
        </ContentTemplate>
    </asp:UpdatePanel>

Once I did that, the message appeared and disappeared correctly.
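The BuildBadParameterMessage helper called from the ReportError handler is not shown in this post. As a rough sketch of one way it might work, the code below pulls the parameter name out of the raw SSRS message and swaps it for the label the user sees on the page. The ReportErrorText class, the FriendlyNames lookup, the "pFromDate" entry and the wording of the friendly messages are all assumptions made for illustration. It also takes the message as a string rather than the ReportErrorEventArgs, so that it compiles without the report viewer assembly.

```csharp
using System;
using System.Collections.Generic;
using System.Text.RegularExpressions;

public static class ReportErrorText
{
    // Hypothetical mapping from report parameter names to the labels on the page.
    private static readonly Dictionary<string, string> FriendlyNames =
        new Dictionary<string, string>(StringComparer.OrdinalIgnoreCase)
        {
            { "pFromDate", "From Date" },
            { "pToDate", "To Date" }
        };

    // Captures the quoted name in "... report parameter 'pToDate' ...".
    private static readonly Regex ParameterName =
        new Regex(@"report parameter '(?<name>[^']+)'", RegexOptions.IgnoreCase);

    public static string BuildBadParameterMessage(string rawMessage)
    {
        Match match = ParameterName.Match(rawMessage ?? string.Empty);
        if (!match.Success)
        {
            // No parameter name found, so fall back to a generic message.
            return "One of the values entered is not in the expected format.";
        }

        string name = match.Groups["name"].Value;
        string label;
        if (!FriendlyNames.TryGetValue(name, out label))
        {
            // Unknown parameter: show the raw name rather than nothing at all.
            label = name;
        }

        return "The value entered for \"" + label + "\" is not in the expected format.";
    }
}
```

From the event handler this could be called as ReportErrorText.BuildBadParameterMessage(e.Exception.Message). Matching on the message text is fragile if the wording of the SSRS message changes, which is why the sketch falls back to a generic message when the pattern does not match.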

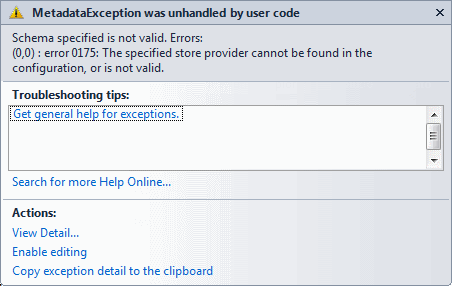

For example, suppose you have a little application with two projects: an application project (in this case, the imaginatively named ConsoleApplication2) and a class library (named DataAccess). The Entity Framework tools in Visual Studio create an app.config file for the data access project. Normally that file (or at least its connection string entries) can be copied to the app.config (or web.config) of the main application. If a ModelEntities folder is created in the DataAccess project and Products.edmx is moved into it, the location of the resource changes, so the connection string is no longer valid. After that change, the connection string needs to be updated to look like this: